
A power struggle over AI rules quietly defined November 2025. While the United States moved to centralize artificial intelligence regulation and supercharge national AI infrastructure, the European Union, India, and other jurisdictions pushed ahead with more structured, risk‑based frameworks that will shape how AI products are built, audited, and shipped worldwide. For tech leaders, founders, and policy teams, these AI policy developments are no longer background noise—they are now core strategic variables in product roadmaps, compliance programs, and infrastructure bets.

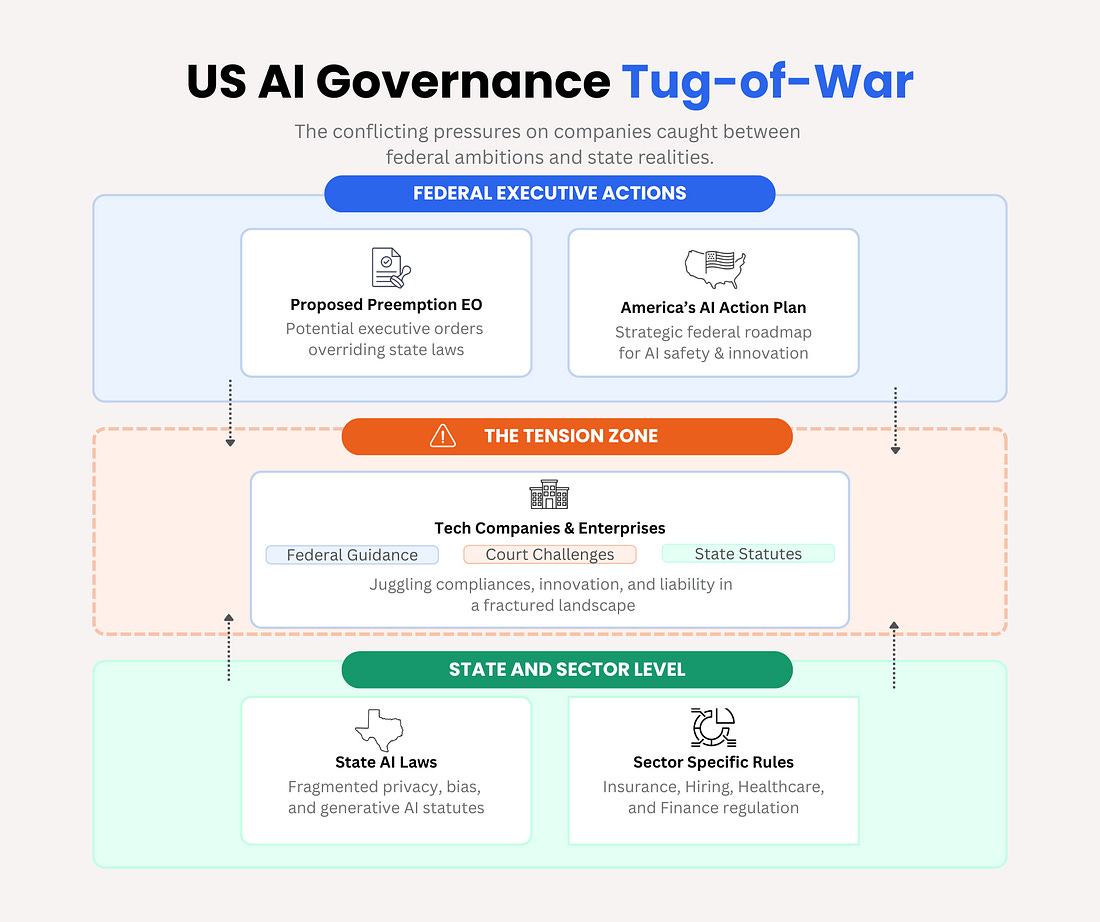

US AI regulation: Federal vs. state showdown

The Trump administration signaled an aggressive push to preempt state AI laws with a potential executive order aimed at curbing state‑level regulations on deepfakes, AI transparency, and public‑sector procurement. Draft proposals described tools such as directing the Justice Department to challenge state AI laws, tying some federal funding to alignment with a more permissive national framework, and framing state rules as a barrier to “American leadership in AI”.

For AI companies, this raised an immediate strategic question: rely on a future single federal AI standard, or keep investing in compliance for an already fragmented state landscape that includes deepfake disclosure rules and sector‑specific AI statutes in areas like hiring and consumer protection? Although no final order was signed in November, the episode underscored that US AI governance may swing toward innovation‑first preemption while courts and states continue to test their authority.

“Genesis Mission”

Alongside the preemption fight, the White House advanced an affirmative AI strategy via the “Genesis Mission” executive order, which directs federal agencies to build a shared AI supercomputing ecosystem for science and innovation. The order tasks the Department of Energy and partner agencies with creating large‑scale AI infrastructure, using exascale systems and national labs, and explicitly calls out collaboration with major hardware players like Nvidia, Dell, Hewlett Packard Enterprise (HPE), and AMD to accelerate AI‑driven scientific discovery.

For research institutions, cloud providers, and AI startups, the Genesis Mission signals that “AI for science” is now a core part of US industrial policy, not just a research trend. It also ties into the broader America’s AI Action Plan, which emphasizes public‑private partnerships, responsible AI research, and using federal compute resources as leverage in the global AI race.

EU AI Act: From principles to enforcement

In Europe, November was about turning the EU AI Act from a legal text into an operational regime that product and compliance teams actually have to follow. New guidance and commentary clarified how “general‑purpose AI” and “high‑risk AI systems” will be treated, reinforcing a structure where certain use cases—like social scoring—are banned, while high‑risk applications in finance, employment, healthcare, and critical infrastructure face strict requirements for documentation, human oversight, robustness, and transparency.

Regulators and industry frameworks also emphasized convergence with standards like ISO/IEC 42001 (AI management systems) and the NIST AI Risk Management Framework, meaning that AI compliance is drifting toward the same maturity expectations as information security or privacy programs. For global SaaS and enterprise AI vendors, this pushes them toward integrated AI governance: model‑risk registers, traceability, data‑provenance tracking, and continuous monitoring instead of one‑off “ethics reviews”.
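To make the idea of a model‑risk register concrete, here is a minimal Python sketch of what one entry might look like. Everything in it is an assumption for illustration: the names `RiskTier`, `RegisterEntry`, `classify`, and `open_gaps` are hypothetical, and the tier mapping only mirrors the examples named above (social scoring as banned; finance, employment, healthcare, and critical infrastructure as high‑risk). It is not the legal text of the EU AI Act, nor a schema taken from ISO/IEC 42001 or the NIST framework.

```python
from dataclasses import dataclass, field
from datetime import date
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high"
    OTHER = "other"


# Illustrative sets drawn only from the examples in this article;
# a real classification needs legal review of the actual regulation.
PROHIBITED_USES = {"social_scoring"}
HIGH_RISK_DOMAINS = {"finance", "employment", "healthcare", "critical_infrastructure"}


def classify(use_case: str, domain: str) -> RiskTier:
    """Map a use case and domain to a (hypothetical) risk tier."""
    if use_case in PROHIBITED_USES:
        return RiskTier.PROHIBITED
    if domain in HIGH_RISK_DOMAINS:
        return RiskTier.HIGH
    return RiskTier.OTHER


@dataclass
class RegisterEntry:
    """One row in a model-risk register (field names are assumptions)."""
    model_id: str
    use_case: str
    domain: str
    data_sources: list[str]      # data-provenance tracking
    human_oversight: bool        # is a human review step documented?
    last_reviewed: date          # supports continuous monitoring
    tier: RiskTier = field(init=False)

    def __post_init__(self) -> None:
        # Derive the tier once, when the entry is created.
        self.tier = classify(self.use_case, self.domain)

    def open_gaps(self) -> list[str]:
        """List the documentation gaps this sketch knows how to check."""
        gaps = []
        if self.tier is RiskTier.PROHIBITED:
            gaps.append("use case is prohibited")
        if self.tier is RiskTier.HIGH:
            if not self.human_oversight:
                gaps.append("no human oversight documented")
            if not self.data_sources:
                gaps.append("no data provenance recorded")
        return gaps


# Usage: a hypothetical credit model with nothing documented yet.
entry = RegisterEntry(
    model_id="credit-scorer-v2",
    use_case="loan_approval",
    domain="finance",
    data_sources=[],
    human_oversight=False,
    last_reviewed=date(2025, 11, 1),
)
print(entry.tier.value, entry.open_gaps())
```

The point of keeping the register as structured data rather than a document is that gaps can be checked continuously, for example in CI or a scheduled job, instead of during a one‑off review.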

India’s AI governance guidelines and emerging‑market rules

India moved forward with its own AI governance blueprint, positioning itself between the US innovation‑first approach and the EU’s more prescriptive model. The India AI Governance Guidelines are framed around seven “sutras” such as people‑centricity, accountability, fairness, and explainability, and envision a governance stack that includes a Technology and Policy Expert Committee, regulatory sandboxes, and mechanisms for reporting AI incidents.

Instead of starting with sweeping bans, India is leaning on a sector‑agnostic but risk‑sensitive model that will be tailored for domains like finance and healthcare, with expectations for watermarking, privacy‑preserving architectures, and bias mitigation across the AI lifecycle. This approach matters for global tech companies and AI startups operating in or with India, as it turns one of the world’s largest digital markets into a testbed for “pragmatic” AI regulation that still foregrounds safety and rights.

Content authenticity, generative AI, and compliance by design

Across Europe, there is also a renewed focus on content authenticity and generative AI controls. EU initiatives to develop a voluntary code of practice for labeling AI‑generated content are intended to complement AI Act transparency rules, pushing platforms and model providers toward watermarking, provenance metadata, and user‑facing indicators that distinguish synthetic media from human‑generated content.

For product teams shipping generative AI features—especially in social media, advertising, and political communication—this means “compliance by design” will increasingly include synthetic‑content labeling, traceable model outputs, and safeguards against harmful uses like election interference or deepfake abuse. These obligations will likely become de‑facto global norms, as platforms choose uniform implementations rather than region‑specific behavior where possible.
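As a rough illustration of synthetic‑content labeling with traceable outputs, the Python sketch below attaches a provenance record to a generated asset. It is a simplified assumption, not an implementation of any specific standard or of the EU code of practice: the function names `label_synthetic` and `matches` and the record fields are hypothetical, and the record is sidecar metadata, not a watermark embedded in the media itself.

```python
import hashlib
import json
from datetime import datetime, timezone


def label_synthetic(content: bytes, model_id: str) -> dict:
    """Build a provenance record for a piece of AI-generated content."""
    return {
        "synthetic": True,                                  # machine-readable flag
        "model_id": model_id,                               # which model produced it
        "sha256": hashlib.sha256(content).hexdigest(),      # ties the record to the exact bytes
        "generated_at": datetime.now(timezone.utc).isoformat(),
        "user_label": "AI-generated",                       # text for a user-facing indicator
    }


def matches(content: bytes, record: dict) -> bool:
    """Check that a record still describes these exact bytes."""
    return hashlib.sha256(content).hexdigest() == record["sha256"]


# Usage: label a generated caption and serialize the record for storage.
caption = b"A sunset over the harbor."
record = label_synthetic(caption, model_id="demo-model")
print(json.dumps(record, indent=2))
print(matches(caption, record))
```

A hash‑based record like this only supports traceability when the asset is unmodified; any edit or re‑encoding changes the hash, which is one reason platforms also look at embedded watermarks and signed provenance metadata.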

What AI policy developments mean for tech leaders and teams

Taken together, the AI policy moves show a clear split: the US is leaning into AI deregulation and national infrastructure investment, while the EU, India, and others refine risk‑based frameworks that make AI governance part of everyday operations. For CTOs, CPOs, and founders, this means:

AI regulation is now a strategic constraint, not just a legal afterthought; decisions about where to host models, how to log decisions, and how to label content are increasingly dictated by law and standard‑setting.

“AI compliance” is converging with security and privacy disciplines, demanding cross‑functional teams, formal risk registers, and tooling for monitoring bias, safety, and traceability, particularly in high‑risk and regulated sectors.

The companies that adapt fastest—by building AI governance into their architecture, processes, and culture—will be best positioned to ship AI products that are not only competitive, but also deployable across the US, EU, India, and other key markets without constant re‑engineering for each new rule set.
