Apple’s approach to artificial intelligence stands in stark contrast to the cloud-centric strategies of competitors like Google, OpenAI, and Microsoft. By prioritizing on-device processing, ironclad privacy, and custom M-series silicon, Apple is carving a path optimized for consumer trust and everyday utility rather than raw cloud scale.

As of early 2026, this strategy, crystallized in Apple Intelligence, has forced rivals to adapt through partnerships, feature-parity pushes, and hardware bets of their own.

Apple’s Core Pillars: On-Device, Privacy, Silicon

At the heart of Apple’s divergence is on-device AI. Unlike cloud-reliant systems that beam user data to distant servers, Apple runs lightweight foundation models directly on iPhones, iPads, and Macs. This delivers sub-second latency for tasks like text summarization, image generation, and notification prioritization—crucial for seamless user experiences without internet dependency.

This ties into Apple’s privacy-first positioning. Apple Intelligence processes sensitive data locally on the Neural Engine, with “Private Cloud Compute” handling overflow requests in anonymized form on servers whose data Apple itself cannot access. No user data is retained for training, a direct answer to the post-ChatGPT privacy scandals that eroded trust in Big Tech AI.
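The local-first, overflow-to-cloud pattern described above can be sketched in a few lines of Python. This is an illustration only, not Apple’s actual routing logic: the `Request` type, the `ON_DEVICE_TOKEN_BUDGET` threshold, the `choose_route` and `strip_identifiers` helpers, and the list of identifying fields are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    ON_DEVICE = "on_device"
    PRIVATE_CLOUD = "private_cloud"


@dataclass(frozen=True)
class Request:
    task: str
    estimated_tokens: int  # rough size of the prompt plus expected output


# Hypothetical limit: the largest request the local model is assumed to handle.
ON_DEVICE_TOKEN_BUDGET = 4096


def choose_route(request: Request, budget: int = ON_DEVICE_TOKEN_BUDGET) -> Route:
    """Prefer local processing; overflow only when the request exceeds the budget."""
    if request.estimated_tokens <= budget:
        return Route.ON_DEVICE
    return Route.PRIVATE_CLOUD


def strip_identifiers(payload: dict) -> dict:
    """Drop (assumed) identifying fields before anything leaves the device."""
    identifying = {"user_id", "device_id", "email"}
    return {key: value for key, value in payload.items() if key not in identifying}


if __name__ == "__main__":
    print(choose_route(Request("summarize a notification", 300)))
    print(choose_route(Request("analyze a long document", 20_000)))
    print(strip_identifiers({"user_id": "u-1", "text": "Lunch at noon?"}))
```

The design point is the default: a request stays on the device unless it cannot fit, and anything that does leave is stripped of identifiers first.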

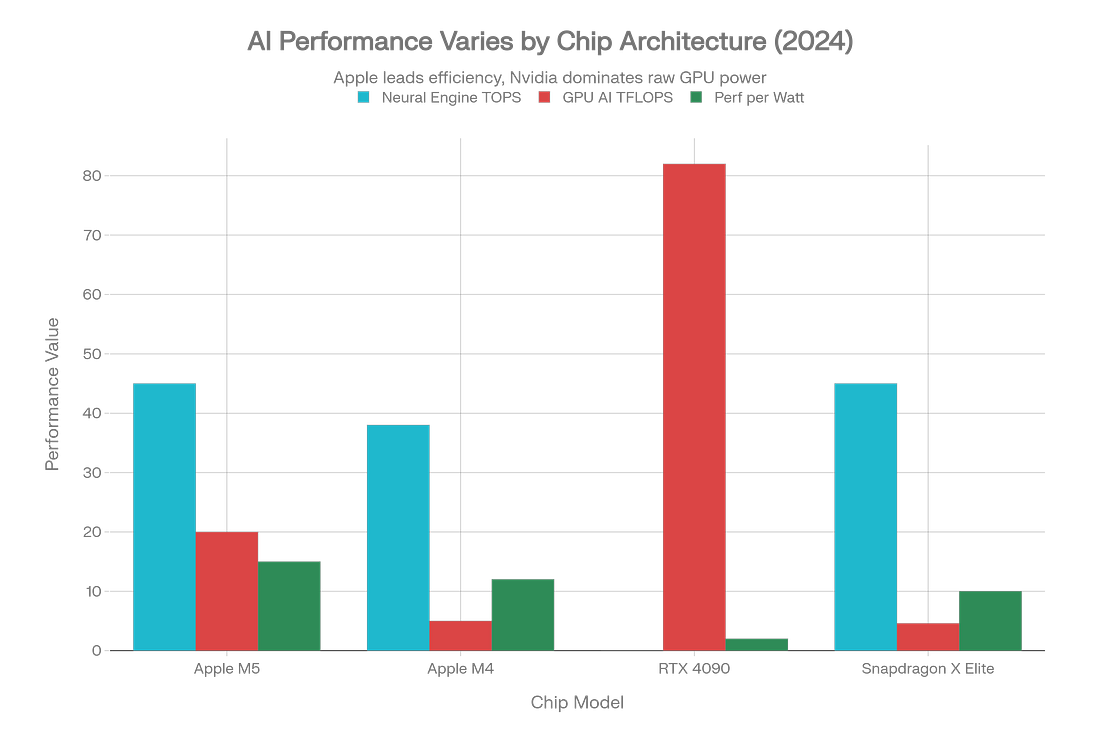

Powering it all is Apple’s M-series silicon advantage. The unified memory architecture and dedicated AI accelerators in chips like the M4 and the M5, launched in October 2025, enable massive efficiency gains. The M5’s 16-core Neural Engine hits around 45 TOPS (trillions of operations per second), with 4x the GPU AI compute of the M4 and 30% more memory bandwidth, all while sipping power for all-day battery life.

The chart above illustrates Apple’s edge: the M5 and M4 excel in Neural Engine TOPS and performance-per-watt, outpacing power-hungry Nvidia GPUs on efficiency for mobile AI workloads.
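For readers who want the two metrics spelled out, here is a minimal Python sketch of how TOPS and performance-per-watt relate. The 45 TOPS figure comes from the text above; the 10 W power draw is a placeholder invented purely for the arithmetic, not a measured or published number, and the helper names are hypothetical.

```python
def ops_per_second(tops: float) -> float:
    """Convert TOPS (trillions of operations per second) to raw operations per second."""
    return tops * 1e12


def tops_per_watt(tops: float, watts: float) -> float:
    """Performance-per-watt: throughput divided by power draw."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return tops / watts


if __name__ == "__main__":
    m5_tops = 45.0            # figure quoted in the article
    placeholder_watts = 10.0  # hypothetical power draw, for illustration only
    print(ops_per_second(m5_tops))
    print(tops_per_watt(m5_tops, placeholder_watts))
```

A chip with a lower headline TOPS number can still win on this metric if its power draw is proportionally smaller, which is the comparison the chart is making.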

These pillars aren’t just technical; they’re a moat. Apple’s ecosystem—1 billion+ active devices—creates a flywheel where AI enhances hardware stickiness, boosting services revenue without ads or data sales.

Recent Momentum and 2026 Roadmap

Apple Intelligence hit prime time in late 2025, after Apple opened its on-device models to all developers through the Foundation Models framework in June. The framework lets third-party apps tap those models for free (with opt-in cloud boosts), sparking an app ecosystem boom.

The M5 announcement marked a leap: an enhanced Siri with conversational depth, multitasking, and multimodal inputs, rolling out in spring 2026. Leadership hires like Amar Subramanya underscore Apple’s bets on custom foundation models and safety guardrails.

Analysts forecast a payoff in 2026. Apple’s “infrastructure-first” bet commoditizes cloud LLMs, positioning the company for personal AI dominance. C-level trust in AI jumped 54% post-launch, validating the privacy play amid regulatory scrutiny.

Yet challenges linger: delayed features risked perceptions of lag, and Siri must prove less “hallucinatory” than rivals.

Competitor Responses: Partnership, Parity, Pivot

Rivals aren’t standing still. Google’s dual-track response exemplifies the scramble.

Google’s Hybrid Counterplay

In January 2026, Google unveiled “Personal Intelligence” in its Gemini app, a direct Apple Intelligence clone that offers contextual task help, image generation, and proactive suggestions, available to U.S. subscribers first. This challenges Apple head-on in consumer AI, leveraging Gemini’s multimodal prowess.

Yet collaboration trumps pure rivalry. A January 11 multi-year deal integrates Gemini into Apple Foundation Models and Siri, enhancing complex queries while routing through Private Cloud Compute. Reports call it multi-billion-dollar, signaling Apple “ditching” prior OpenAI ties for Google’s scale.

This lets Google tap Apple’s ecosystem for cloud revenue while accelerating Gemini Nano on-device models for Android. Gemini now rivals Apple in Workspace integrations, narrowing the productivity gap.

OpenAI’s Hardware Gambit

OpenAI’s story is pivot-heavy. Its 2024 partnership embedded ChatGPT as an opt-in Siri sidekick—no data stored, user-initiated only. But by 2026, Apple shifted to Google, prompting OpenAI’s aggressive moves.

The $6.4B io acquisition and Jony Ive partnership aim at AI-native hardware challenging iPhones—think screenless, voice-first devices with edge-optimized models. OpenAI eyes smaller, deployable LLMs to mimic Apple’s on-device wins, though its cloud DNA creates friction.

Sam Altman has hinted at “device intelligence” revolutions, validating Apple’s thesis while racing to diversify beyond Azure dependency.