LLMs vs. LRMs: What’s the Difference and Why It Matters to Tech Writers

Understanding these approaches is now critical to documentation success

What is an LLM (Large Language Model)?

An LLM predicts the next most likely token based on patterns learned from massive amounts of text.
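To make "predicts the next most likely token" concrete, here is a deliberately tiny Python sketch. It makes several simplifying assumptions. Tokens are whitespace-separated words. The corpus is a hypothetical three-sentence sample. The model counts word pairs (a bigram model) instead of training a neural network. It illustrates the idea, not how production LLMs are built.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional

# Hypothetical toy corpus; real models learn from vastly more text.
corpus = (
    "restart the service after you change the config . "
    "restart the service after you update the plugin . "
    "back up the config before you change the config ."
).split()

# Count which token follows each token: a bigram "model" of the corpus.
follows: Dict[str, Counter] = defaultdict(Counter)
for current, nxt in zip(corpus, corpus[1:]):
    follows[current][nxt] += 1


def predict_next(token: str) -> Optional[str]:
    """Return the most frequent follower of `token`, or None if never seen."""
    candidates = follows.get(token)
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


def generate(start: str, max_tokens: int = 8) -> List[str]:
    """Greedily append the likeliest next token until '.' or max_tokens."""
    out = [start]
    while len(out) < max_tokens:
        nxt = predict_next(out[-1])
        if nxt is None:
            break
        out.append(nxt)
        if nxt == ".":
            break
    return out


print(" ".join(generate("restart")))
```

Run as written, the greedy loop produces "restart the config ." That sentence appears nowhere in the corpus. Each step picked a locally likely word, and the result is fluent but unsupported by the source text: a small-scale version of sounding right while being wrong.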

What it’s good at:

Fluent language generation

Summarizing, rewriting, translating

Answering questions that resemble things it has seen before

What it is not designed to do:

Prove correctness

Track rules or constraints reliably

Reason step-by-step unless prompted very carefully

Key limitation for technical documentation:

An LLM can sound right while being wrong — confidently.

This is one reason why LLM-only chatbots hallucinate procedures, mix product versions, and invent configuration options.

What is an LRM (Large Reasoning Model)?

An LRM is trained to reason before it answers, not just to predict fluent text. It works through intermediate steps such as planning, verification, constraint checking, and logical evaluation before producing a final answer.

Common characteristics:

Multi-step reasoning

Internal validation of outputs

Better handling of math, logic, and procedures

More deliberate (and often slower) responses

Think of it this way:

LLM: “What sounds like the right answer?”

LRM: “What must be true for this answer to be correct?”

Examples include so-called "reasoning models" such as OpenAI's o-series (for example, o1), though the industry has not yet settled on standard terminology.
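The "what must be true" question can be illustrated with an explicit constraint check. This Python sketch is an analogy only, not a description of how any reasoning model works internally. The step names and prerequisite rules are hypothetical. The checker simply rejects a proposed procedure in which a step runs before its stated prerequisites.

```python
from typing import Dict, List, Set

# Hypothetical documented rules: each step lists the steps that must come first.
PREREQUISITES: Dict[str, Set[str]] = {
    "install_agent": set(),
    "create_api_key": set(),
    "configure_agent": {"install_agent", "create_api_key"},
    "start_agent": {"configure_agent"},
}


def violations(procedure: List[str]) -> List[str]:
    """Return one message per step that is unknown or runs before its prerequisites."""
    done: Set[str] = set()
    problems: List[str] = []
    for step in procedure:
        if step not in PREREQUISITES:
            problems.append(f"{step}: unknown step")
        else:
            missing = PREREQUISITES[step] - done
            if missing:
                problems.append(f"{step}: missing {sorted(missing)}")
        done.add(step)
    return problems


# A fluent-sounding answer that skips a step...
candidate = ["install_agent", "configure_agent", "start_agent"]
print(violations(candidate))
# ...versus one that satisfies every stated constraint.
print(violations(["create_api_key", "install_agent", "configure_agent", "start_agent"]))
```

The first procedure reads plausibly but fails the check because `configure_agent` runs before `create_api_key`. The second passes. The checker can only enforce prerequisites that the documentation actually states.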

Why Tech Writers Should Care (A Lot)

For a long time, documentation had a fairly predictable role. People searched it, skimmed it, and read it. If they misunderstood something, that misunderstanding usually stayed local. The consequences were real, but they were bounded by human interpretation.

That boundary no longer exists.

Today, AI systems sit between your documentation and your users. They answer questions, explain features, recommend actions, and onboard customers. Increasingly, they do not simply quote documentation. They reason over it. That shift fundamentally changes the stakes.

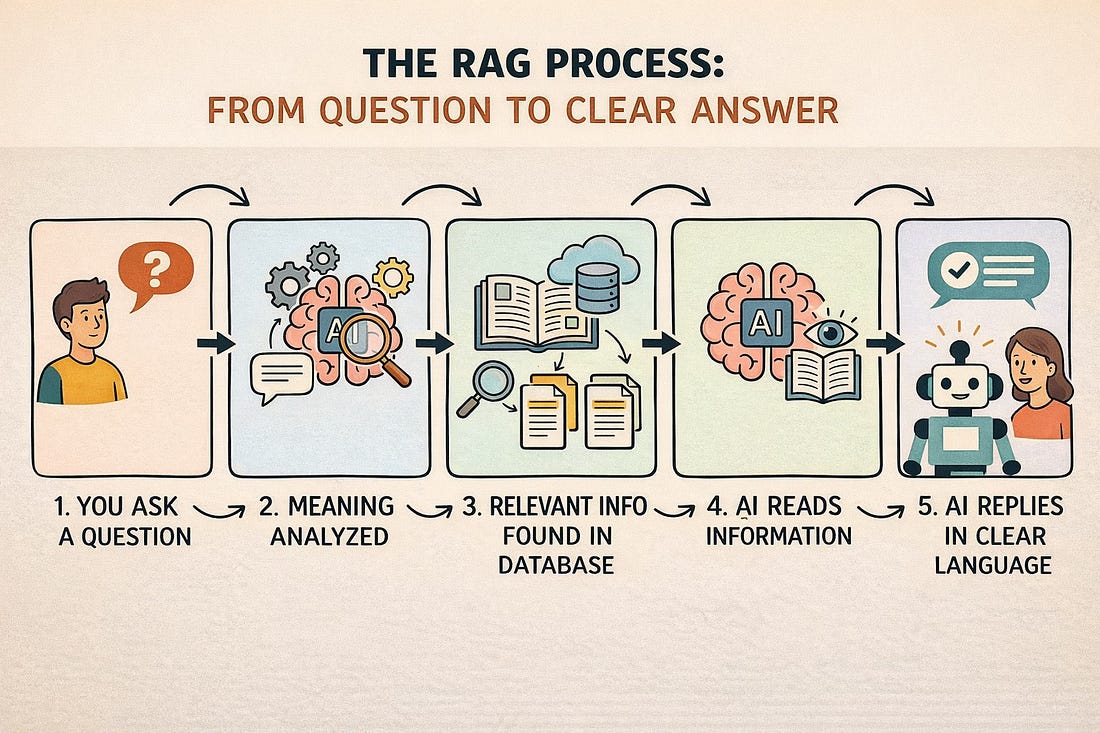

With traditional LLMs, poor documentation produced awkward or incorrect answers. With reasoning-oriented models, poor documentation produces incorrect decisions. A vague prerequisite, an implied condition, or an ambiguous rule is no longer just a writing issue — it becomes a logical flaw that propagates through automated systems. When an AI reasons from content that is incomplete or inconsistent, it fills in the gaps with confidence.

This is where the difference between “sounds right” and “is right” becomes painful.

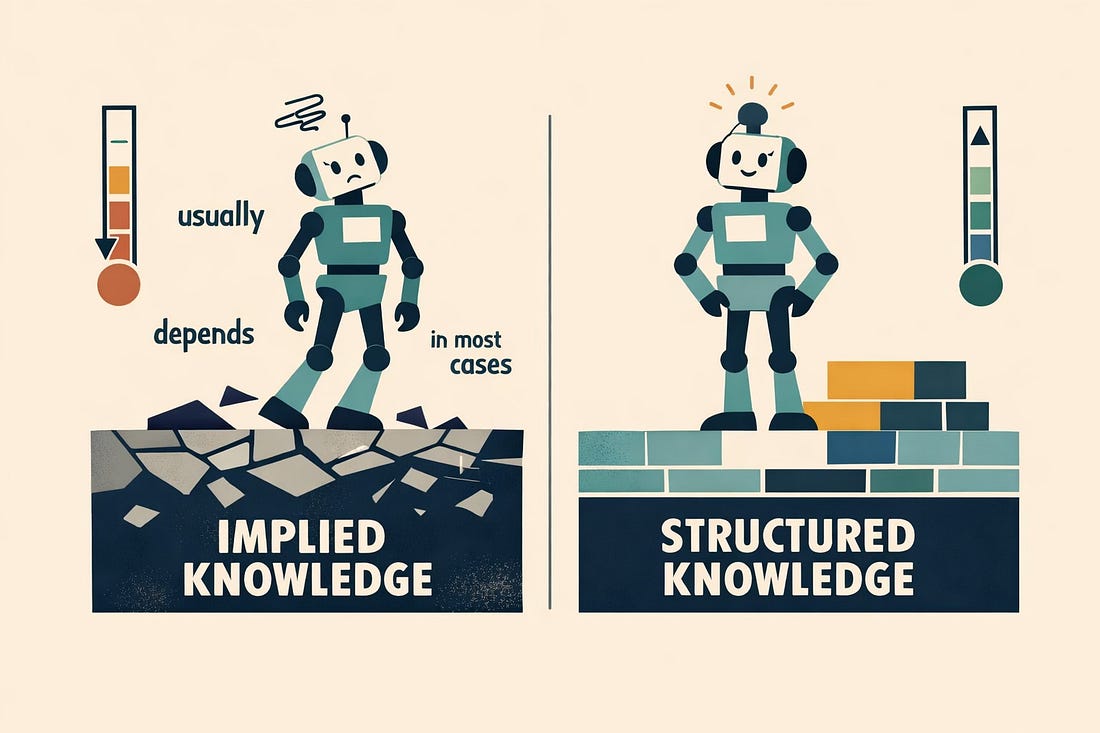

As LRMs come into play, documentation stops behaving like narrative text and starts functioning like a knowledge system. Models attempt to infer intent, reconcile contradictions, and determine applicability. If your content relies on human intuition — phrases like “usually,” “in most cases,” or “as needed” — the model has nothing solid to anchor to. Humans fill those gaps naturally. Machines guess.
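One practical way to find these soft spots is a simple phrase scan. The Python sketch below is a minimal, hypothetical linter. It flags the three phrases quoted above plus two assumed additions ("typically", "if necessary"). Because it matches words only, it cannot judge whether a nearby sentence already states the condition.

```python
import re
from typing import List, Tuple

# Hypothetical starter list; the last two entries are assumptions, not from the article.
VAGUE_PHRASES = ["usually", "in most cases", "as needed", "typically", "if necessary"]

# Whole-word, case-insensitive match for any listed phrase.
PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(p) for p in VAGUE_PHRASES) + r")\b",
    re.IGNORECASE,
)


def find_vague_phrases(text: str) -> List[Tuple[int, str]]:
    """Return (line_number, phrase_as_written) for each vague phrase found."""
    hits: List[Tuple[int, str]] = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for match in PATTERN.finditer(line):
            hits.append((lineno, match.group(0)))
    return hits


sample = """Restart the service as needed.
In most cases, the default timeout is sufficient.
Set timeout_seconds to 30 when the queue holds more than 1,000 jobs."""

for lineno, phrase in find_vague_phrases(sample):
    print(f"line {lineno}: '{phrase}' leaves the condition implicit")
```

On the sample, it flags "as needed" on line 1 and "In most cases" on line 2. The third line states a concrete threshold and passes.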

That guessing erodes trust. And trust is now part of the product.

This also reframes what “AI-ready documentation” really means. Early conversations focused on whether chatbots could answer questions from docs. The more important question now is whether AI can reason correctly from those docs. That depends far less on elegant prose and far more on structure, clarity, and intent. Modular topics, explicit conditions, clean version boundaries, and clearly stated rules outperform beautifully written but loosely organized pages.
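Here is a minimal Python sketch of what explicit conditions and clean version boundaries can look like when content is treated as data. The `Rule` schema, the `max_connections` setting, the file names, and the version numbers are all hypothetical. The point is that each rule declares the versions it covers, so a lookup can return nothing instead of guessing.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Version = Tuple[int, int]  # (major, minor); tuples compare element by element


@dataclass(frozen=True)
class Rule:
    """One explicitly stated rule with a clean version boundary (hypothetical schema)."""
    statement: str
    min_version: Version
    max_version: Optional[Version] = None  # None means the rule still applies

    def applies_to(self, version: Version) -> bool:
        if version < self.min_version:
            return False
        return self.max_version is None or version <= self.max_version


# Hypothetical rules: the config file moved between major versions.
RULES = [
    Rule("Set max_connections in server.conf.", (2, 0), (2, 9)),
    Rule("Set max_connections in server.yaml.", (3, 0)),
]


def applicable_rules(version: Version) -> List[str]:
    """Return only the rules whose version range includes `version`."""
    return [r.statement for r in RULES if r.applies_to(version)]


print(applicable_rules((2, 4)))
print(applicable_rules((3, 1)))
print(applicable_rules((1, 8)))
```

Version 2.4 gets only the `server.conf` rule, 3.1 gets only the `server.yaml` rule, and 1.8 gets an empty list. The empty list tells a downstream system that no documented rule applies, rather than leaving it to infer one.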

For tech writers, this is not a threat. It is a return to relevance.

As organizations realize that AI output is only as reliable as the knowledge beneath it, someone must own that knowledge. Someone must decide what is authoritative, what applies in which context, and what must never be inferred. That work does not belong to the model. It belongs to the people who design, govern, and maintain the content — tech writers.

In a reasoning-driven world, documentation is no longer passive reference material. It is operational input. It drives automated explanations, customer decisions, and support outcomes. When something goes wrong, leaders will not ask, “Why did the AI hallucinate?” They will ask, “Why did our knowledge allow it to?”

Tech writers are uniquely positioned to answer that question — and to prevent it from being asked at all.

The Practical Takeaway for Tech Writers

You do not need to become an AI engineer. You do need to write content that survives reasoning, not just reading.

That means: