

Cloudflare has cut the number of cold starts on its Workers platform by a factor of 10 through a technique called worker sharding.

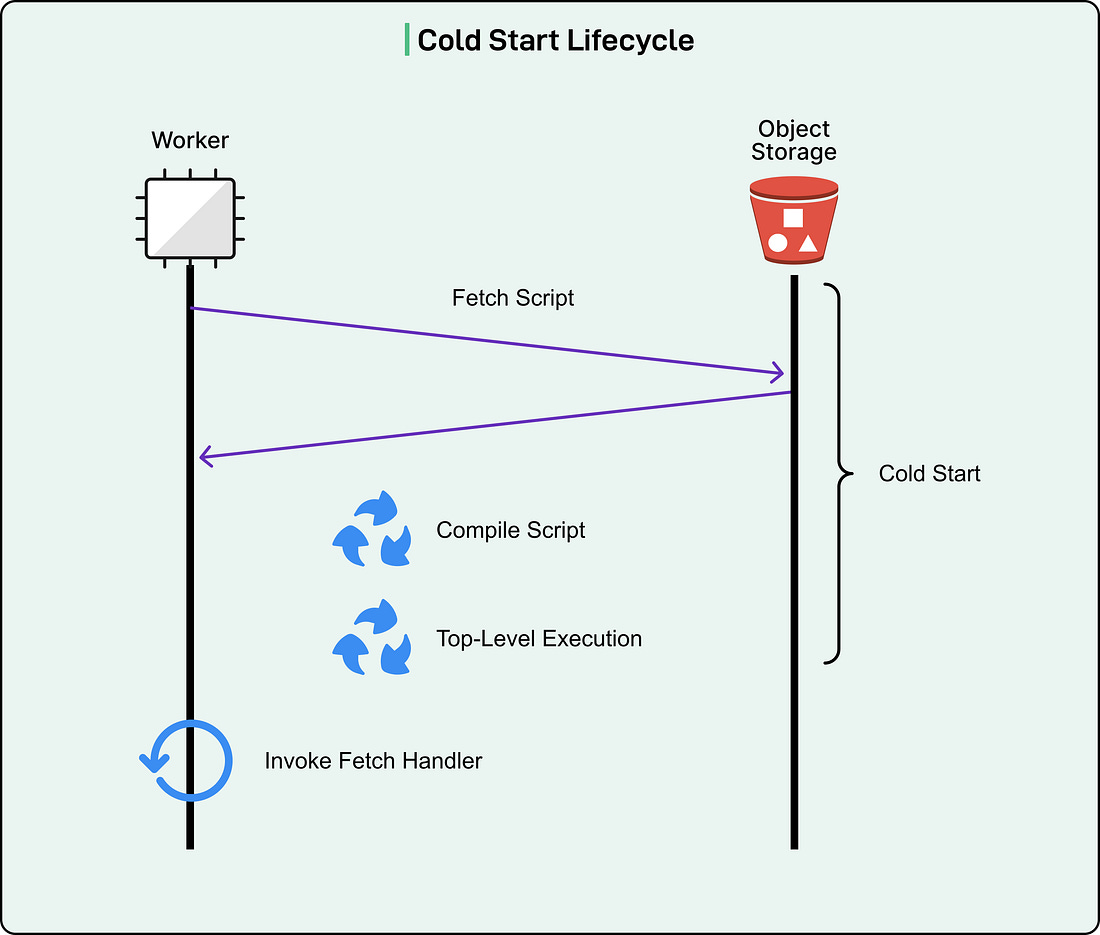

A cold start occurs when serverless code must initialize completely before handling a request. For Cloudflare Workers, this initialization involves four distinct phases:

1. Fetching the JavaScript source code from storage
2. Compiling that code into executable machine instructions
3. Executing any top-level initialization code
4. Invoking the code to handle the incoming request
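As a rough illustration of these four phases, the toy model below uses Python's built-in `compile` and `exec` as stand-ins for the JavaScript engine. `WorkerHost`, `source_store`, and the `handle` entry point are hypothetical names chosen for this sketch and do not reflect Cloudflare's implementation. It shows that only the first request pays for fetching, compiling, and initializing; later requests go straight to invocation.

```python
from dataclasses import dataclass, field


@dataclass
class WorkerHost:
    """Toy model of one server that keeps initialized Workers in memory."""

    warm: dict = field(default_factory=dict)  # worker_id -> ready handler
    log: list = field(default_factory=list)   # phases executed, in order

    def handle(self, worker_id, source_store, request):
        handler = self.warm.get(worker_id)
        if handler is None:
            # Cold start: phases 1-3 must finish before the request runs.
            source = source_store[worker_id]           # 1. fetch source
            self.log.append("fetch")
            code = compile(source, worker_id, "exec")  # 2. compile
            self.log.append("compile")
            namespace = {}
            exec(code, namespace)                      # 3. top-level init
            self.log.append("init")
            handler = namespace["handle"]
            self.warm[worker_id] = handler
        self.log.append("invoke")
        return handler(request)                        # 4. invoke


# Hypothetical Worker source: one top-level statement plus a handler.
source_store = {
    "hello": "GREETING = 'hi'\ndef handle(request):\n    return GREETING + ' ' + request\n"
}
host = WorkerHost()
first = host.handle("hello", source_store, "world")   # cold: all four phases
second = host.handle("hello", source_store, "again")  # warm: invoke only
```

After both calls, `host.log` records the three start-up phases once and the invoke phase twice.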

See the diagram below:

As a result, 99.99% of requests now hit an already-running code instance instead of waiting for code to start up.

The overall solution works by routing all requests for a specific application to the same server using a consistent hash ring, reducing the number of times code needs to be initialized from scratch.
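To make the routing idea concrete, here is a minimal sketch of a consistent hash ring. The class name `HashRing`, the server names, the SHA-256 hash, and the use of 100 virtual nodes per server are all assumptions made for illustration; the source does not describe how Cloudflare builds its ring.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    """Map a string to a 64-bit position on the ring."""
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class HashRing:
    """Consistent hash ring mapping Worker IDs to servers (illustrative)."""

    def __init__(self, servers, vnodes=100):
        if not servers:
            raise ValueError("at least one server is required")
        # Each server owns several points so that load spreads more evenly.
        self._points = sorted(
            (_hash(f"{server}#{i}"), server)
            for server in servers
            for i in range(vnodes)
        )
        self._keys = [position for position, _ in self._points]

    def server_for(self, worker_id: str) -> str:
        # Walk clockwise to the first point after the Worker's hash,
        # wrapping around at the end of the ring.
        idx = bisect.bisect_right(self._keys, _hash(worker_id)) % len(self._keys)
        return self._points[idx][1]


ring = HashRing(["server-a", "server-b", "server-c"])
target = ring.server_for("worker-123")  # same server on every call
```

Because every request for a given Worker ID maps to the same server, that server can keep one warm instance instead of each server cold-starting its own copy. When a server leaves the ring, only the Workers that were assigned to it move elsewhere.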

In this article, we will look at how Cloudflare built this system and the challenges it faced.

Disclaimer: This post is based on publicly shared details from the Cloudflare Engineering Team. Please comment if you notice any inaccuracies.

The Initial Solution

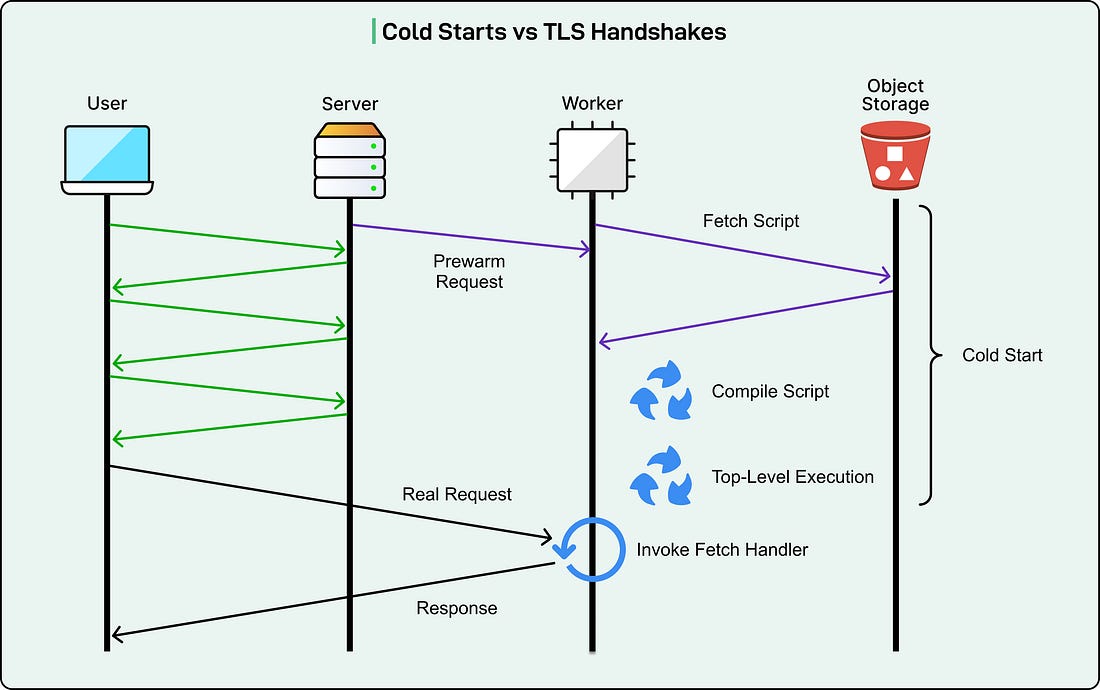

In 2020, Cloudflare introduced a solution that masked cold starts by pre-warming Workers during TLS handshakes.

TLS is the security protocol that encrypts web traffic and makes HTTPS possible. Before any actual data flows between a browser and server, they perform a handshake to establish encryption. This handshake requires multiple round-trip messages across the network, which takes time.

The original technique worked because Cloudflare could identify which Worker to start from the Server Name Indication (SNI) field in the very first TLS message. While the rest of the handshake continued, they would initialize the Worker in the background. If the Worker finished starting up before the handshake completed, the user experienced zero visible delay.

See the diagram below:

This technique succeeded initially because cold starts took only 5 milliseconds while TLS 1.2 handshakes required three network round-trips. The handshake provided enough time to hide the cold start entirely.
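The pre-warming idea can be sketched with `asyncio`: the cold start is launched as a background task as soon as the hostname is known, and the handshake proceeds concurrently. The function names, the 20 ms round-trip time, and the use of `asyncio.sleep` to stand in for network and start-up work are assumptions for this sketch only.

```python
import asyncio
import time


async def tls_handshake(round_trips: int, rtt: float) -> None:
    """Stand-in for the handshake: each round trip costs one RTT."""
    await asyncio.sleep(round_trips * rtt)


async def cold_start(hostname: str, duration: float) -> str:
    """Stand-in for fetching, compiling, and initializing a Worker."""
    await asyncio.sleep(duration)
    return f"{hostname}:ready"


async def accept_connection(sni_hostname, round_trips, rtt, cold_start_time):
    # The SNI hostname arrives in the first TLS message, so warm-up
    # can begin before the handshake has finished.
    warmup = asyncio.create_task(cold_start(sni_hostname, cold_start_time))
    await tls_handshake(round_trips, rtt)
    handshake_done = time.perf_counter()
    worker = await warmup
    # Extra time the user waits after the handshake completes.
    visible_delay = time.perf_counter() - handshake_done
    return worker, visible_delay


if __name__ == "__main__":
    # 5 ms cold start hidden behind three 20 ms round trips (assumed RTT).
    worker, delay = asyncio.run(
        accept_connection("example.com", round_trips=3, rtt=0.02, cold_start_time=0.005)
    )
    print(worker, f"visible delay: {delay * 1000:.1f} ms")
```

When the cold start is shorter than the handshake, the measured visible delay is close to zero. When it is longer, the remainder shows up as waiting time after the handshake.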

Why Cold Starts Became a Problem Again

The effectiveness of the TLS handshake technique depended on a specific timing relationship in which cold starts had to complete faster than TLS handshakes. Over the past five years, this relationship broke down for two reasons.

First, cold starts became longer. Cloudflare increased Worker script size limits from 1 megabyte to 10 megabytes for paying customers and to 3 megabytes for free users. They also increased the startup CPU time limit from 200 milliseconds to 400 milliseconds. These changes allowed developers to deploy much more complex applications on the Workers platform. Larger scripts require more time to transfer from storage and more time to compile. Longer CPU time limits mean initialization code can run for longer periods. Together, these changes pushed cold start times well beyond their original 5-millisecond duration.

Second, TLS handshakes became faster. TLS 1.3 reduced the handshake from three round-trips to just one round-trip. This improvement in security protocols meant less time to hide cold start operations in the background.

The combination of longer cold starts and shorter TLS handshakes meant that users increasingly experienced visible delays. The original solution no longer eliminated the problem.
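The arithmetic behind this breakdown is simple enough to write down. In the sketch below, the 20 ms round-trip time and the 100 ms modern cold start are assumed values picked for illustration; only the 5 ms cold start, the 400 ms startup limit, and the round-trip counts come from the figures above.

```python
def visible_delay_ms(cold_start_ms: float, round_trips: int, rtt_ms: float) -> float:
    """Cold start time left over after the handshake window is used up."""
    handshake_window_ms = round_trips * rtt_ms
    return max(0.0, cold_start_ms - handshake_window_ms)


RTT_MS = 20.0  # assumed round-trip time, not from the source

# 2020: 5 ms cold start, TLS 1.2 with three round trips (60 ms window).
delay_2020 = visible_delay_ms(5.0, round_trips=3, rtt_ms=RTT_MS)

# Today: hypothetical 100 ms cold start, TLS 1.3 with one round trip (20 ms window).
delay_today = visible_delay_ms(100.0, round_trips=1, rtt_ms=RTT_MS)

# Worst case under the 400 ms startup CPU limit with the same assumed RTT.
delay_worst = visible_delay_ms(400.0, round_trips=1, rtt_ms=RTT_MS)
```

Under these assumptions the 2020 case hides the cold start completely, while the later cases leave 80 ms and 380 ms exposed to the user.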

Reducing Cold Starts Through Better Request Routing

Cloudflare realized that further optimizing cold start duration directly would be ineffective. Instead, they needed to reduce the absolute number of cold starts happening across their network.